ETHICAL DONATORS

AND COMMUNITY MEMBERS REQUIRED, TO FILL THIS

SPACE WITH YOUR POLITICAL SLOGANS, ADVERTISING OFFERS,WEBSITE DETAILS, CHARITY REQUESTS, LECTURE

OPPORTUNITIES, EDUCATIONAL WORKSHOPS, SPIRITUAL

AND/OR HEALTH ENLIGHTENMENT COURSES.

AS AN IMPORTANT MEMBER OF THE

GLOBAL INDEPENDENT

MEDIA COMMUNITY, MIKIVERSE SCIENCE HONOURABLY REQUESTS YOUR HELP TO

KEEP YOUR NEWS, DIVERSE,

AND FREE OF CORPORATE, GOVERNMENT SPIN AND

CONTROL. FOR MORE INFORMATION ON HOW YOU MAY ASSIST, PLEASE CONTACT:

themikiverse@gmail.com

Monday, March 25, 2013

RUPERT SHELDRAKE AT EU 2013—"SCIENCE SET FREE"

Labels:

100% Independent Australian News,

mikiverse,

mikiverse headline news,

Mikiverse Hip Hop,

Mikiverse Law,

Mikiverse Politics,

Mikiverse Science

RUPERT SHELDRAKE - THE SCIENCE DELUSION BANNED TED TALK


Tuesday, March 19, 2013

THE BIRTH OF OPTOGENETICS

An account of the path to realizing tools for controlling brain circuits with light

By late spring 2000, however, I had become fascinated by a simpler and potentially easier-to-implement approach: using naturally occurring microbial opsins, which would pump ions into or out of neurons in response to light. Opsins had been studied since the 1970s because of their fascinating biophysical properties, and for the evolutionary insights they offer into how life forms use light as an energy source or sensory cue.[1. D. Oesterhelt, W. Stoeckenius, “Rhodopsin-like protein from the purple membrane of Halobacterium halobium,” Nat New Biol, 233:149-52, 1971.] These membrane-spanning microbial molecules—proteins with seven helical domains—react to light by transporting ions across the lipid membranes of cells in which they are genetically expressed. (See the illustration above.) For this strategy to work, an opsin would have to be expressed in the neuron’s lipid membrane and, once in place, efficiently perform this ion-transport function. One reason for optimism was that bacteriorhodopsin had successfully been expressed in eukaryotic cell membranes—including those of yeast cells and frog oocytes—and had pumped ions in response to light in these heterologous expression systems. And in 1999, researchers had shown that, although many halorhodopsins might work best in the high salinity environments in which their host archaea naturally live (i.e., in very high chloride concentrations), a halorhodopsin from Natronomonas pharaonis (Halo/NpHR) functioned best at chloride levels comparable to those in the mammalian brain.[2. D. Okuno et al., "Chloride concentration dependency of the electrogenic activity of halorhodopsin," Biochemistry, 38:5422-29, 1999.]

I was intrigued by this, and in May 2000 I e-mailed the opsin pioneer Janos Lanyi, asking for a clone of the N. pharaonis halorhodopsin for the purpose of actively controlling neurons with light. Janos kindly asked his collaborator Richard Needleman to send it to me. But the reality of graduate school was setting in: I had already left Stanford for the summer to take a neuroscience class at the Marine Biological Laboratory in Woods Hole, so I asked Richard to send the clone to Karl Deisseroth. When I returned to Stanford in the fall, I was so busy learning all the skills I would need for my thesis work on motor control that the opsin project took a backseat for a while.

In February 2004, I proposed to Karl that we contact Georg to see if they had constructs they were willing to distribute. Karl got in touch with Georg in March, obtained the construct, and inserted the gene into a neural expression vector. Georg had made several further advances by then: he had created fusion proteins of ChR2 and yellow fluorescent protein, in order to monitor ChR2 expression, and had also found a ChR2 mutant with improved kinetics. Furthermore, Georg commented that in cell culture, ChR2 appeared to require little or no chemical supplementation in order to operate (in microbial opsins, the chemical chromophore all-trans-retinal must be attached to the protein to serve as the light absorber; it appeared to exist at sufficient levels in cell culture).

Finally, we were getting the ball rolling on targetable control of specific neural types. Karl optimized the gene expression conditions, and found that neurons could indeed tolerate ChR2 expression. Throughout July, working in off-hours, I debugged the optics of the Tsien-lab rig that I had often used in the past. Late at night, around 1 a.m. on August 4, 2004, I went into the lab, put a dish of cultured neurons expressing ChR2 into the microscope, patch-clamped a glowing neuron, and triggered the program that I had written to pulse blue light at the neurons. To my amazement, the very first neuron I patched fired precise action potentials in response to blue light. That night I collected data that demonstrated all the core principles we would publish a year later in Nature Neuroscience, announcing that ChR2 could be used to depolarize neurons.[5. E.S. Boyden et al., "Millisecond-timescale, genetically targeted optical control of neural activity," Nat Neurosci, 8:1263-68, 2005.] During that long, exciting first night of experimentation in 2004, I determined that ChR2 was safely expressed and physiologically functional in neurons. The neurons tolerated expression levels of the protein that were high enough to mediate strong neural depolarizations. Even with brief pulses of blue light, lasting just a few milliseconds, the magnitude of expressed-ChR2 photocurrents was large enough to mediate single action potentials in neurons, thus enabling temporally precise driving of spike trains. Serendipity had struck—the molecule was good enough in its wild-type form to be used in neurons right away. I e-mailed Karl, “Tired, but excited.” He shot back, “This is great!!!!!”
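To make "temporally precise driving of spike trains" concrete, here is a minimal sketch in Python. It assumes a textbook leaky integrate-and-fire (LIF) neuron rather than a real ChR2-expressing cell, and it idealizes the photocurrent as a square pulse that is on only while the light is on. Every parameter (membrane time constant, resistance, threshold, a 1 nA photocurrent, 5 ms pulses at 10 Hz) is a hypothetical round number chosen for illustration, not a value from the experiments described above, and the function name `simulate_lif` is invented for this example.

```python
def simulate_lif(pulse_ms=5.0, period_ms=100.0, n_pulses=10,
                 photocurrent_na=1.0, duration_ms=1000.0, dt_ms=0.1):
    """Return spike times (ms) of a toy LIF neuron driven by pulsed photocurrent.

    All constants are hypothetical round numbers, not measured ChR2 values.
    """
    tau_m = 20.0      # membrane time constant (ms), assumed
    r_m = 100.0       # membrane resistance (megaohms); 1 nA x 100 MOhm = 100 mV
    v_rest = -70.0    # resting potential (mV), assumed
    v_thresh = -50.0  # spike threshold (mV), assumed
    v_reset = -70.0   # potential right after a spike (mV), assumed

    v = v_rest
    spike_times = []
    for step in range(int(round(duration_ms / dt_ms))):
        t = step * dt_ms
        # Light is on for the first pulse_ms of each period, for n_pulses periods.
        k = int(t // period_ms)
        light_on = k < n_pulses and (t - k * period_ms) < pulse_ms
        # Idealized square photocurrent (nA): no channel kinetics are modeled.
        i_photo = photocurrent_na if light_on else 0.0
        # Forward-Euler step of tau_m * dV/dt = -(V - V_rest) + R_m * I
        v += (dt_ms / tau_m) * (-(v - v_rest) + r_m * i_photo)
        if v >= v_thresh:  # threshold crossing is counted as one action potential
            spike_times.append(t)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    spikes = simulate_lif()
    print(len(spikes), "spikes at", [round(s, 1) for s in spikes])
```

With these assumed values, each 5 ms pulse pushes the model across threshold exactly once, so the spike times lock to the pulse onsets. If `photocurrent_na` is reduced until the steady-state drive `r_m * photocurrent_na` falls below the 20 mV gap between rest and threshold, the model never fires, which mirrors the text's point that the photocurrent had to be large enough to mediate single action potentials.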

Transitions and optical neural silencers

In January 2005, Karl finished his postdoc and became an assistant professor of bioengineering and psychiatry at Stanford. Feng Zhang, then a first-year graduate student in chemistry (and now an assistant professor at MIT and at the Broad Institute), joined Karl’s new lab, where he cloned ChR2 into a lentiviral vector, and produced lentivirus that greatly increased the reliability of ChR2 expression in neurons. I was still working on my PhD, and continued to perform ChR2 experiments in the Tsien lab. Indeed, about half the ChR2 experiments in our first optogenetics paper were done in Richard Tsien’s lab, and I owe him a debt of gratitude for providing an environment in which new ideas could be pursued. I regret that, in our first optogenetics paper, we did not acknowledge that many of the key experiments had been done there. When I started working in Karl’s lab in late March 2005, we carried out experiments to flesh out all the figures for our paper, which appeared in Nature Neuroscience in August 2005, a year after that exhilarating first discovery that the technique worked.

Around that same time, Guoping Feng, then leading a lab at Duke University (and now a professor at MIT), began to make the first transgenic mice expressing ChR2 in neurons.[6. B.R. Arenkiel et al., "In vivo light-induced activation of neural circuitry in transgenic mice expressing channelrhodopsin-2," Neuron, 54:205-18, 2007.] Several other groups, including the Yawo, Herlitze, Landmesser, Nagel, Gottschalk, and Pan labs, rapidly published papers demonstrating the use of ChR2 in neurons in the following months.[7. T. Ishizuka et al., "Kinetic evaluation of photosensitivity in genetically engineered neurons expressing green algae light-gated channels," Neurosci Res, 54:85-94, 2006.][8. X. Li et al., "Fast noninvasive activation and inhibition of neural and network activity by vertebrate rhodopsin and green algae channelrhodopsin," PNAS, 102:17816-21, 2005.][9. G. Nagel et al., "Light activation of channelrhodopsin-2 in excitable cells of Caenorhabditis elegans triggers rapid behavioral responses," Curr Biol, 15:2279-84, 2005.][10. A. Bi et al., "Ectopic expression of a microbial-type rhodopsin restores visual responses in mice with photoreceptor degeneration," Neuron, 50:23-33, 2006.] Clearly, the idea had been in the air, with many groups chasing the use of channelrhodopsin in neurons. These papers showed, among many other groundbreaking results, that no chemicals were needed to supplement ChR2 function in the living mammalian brain.

Almost immediately after I finished my PhD in October 2005, two months after our ChR2 paper came out, I began the faculty job search process. At the same time, I started a position as a postdoctoral researcher with Karl and with Mark Schnitzer at Stanford. The job search ended up consuming much of my time, and while on the road, I began doing bioengineering invention consulting in order to learn about other new technology areas that could be brought to bear on neuroscience. I accepted a faculty job offer from the MIT Media Lab in September 2006, and began the process of setting up a neuroengineering research group there.

Around that time, I began a collaboration with Xue Han, my then girlfriend (and a postdoctoral researcher in the lab of Richard Tsien), to revisit the original idea of using the N. pharaonis halorhodopsin to mediate optical neural silencing. Back in 2000, Karl and I had planned to pursue this jointly; there was now the potential for competition, since we were working separately. Xue and I ordered the gene to be synthesized in codon-optimized form by a DNA synthesis company, and, using the same Tsien-lab rig that had supported the channelrhodopsin paper, Xue acquired data showing that this halorhodopsin could indeed silence neural activity. Our paper[11. X. Han, E.S. Boyden, "Multiple-color optical activation, silencing, and desynchronization of neural activity, with single-spike temporal resolution," PLoS ONE, 2:e299, 2007.] appeared in the March 2007 issue of PLoS ONE; Karl’s group, working in parallel, published a paper in Nature a few weeks later, independently showing that this halorhodopsin could support light-driven silencing of neurons, and also including an impressive demonstration that it could be used to manipulate behavior in Caenorhabditis elegans.[12. F. Zhang et al., "Multimodal fast optical interrogation of neural circuitry," Nature, 446:633-39, 2007.] Later, both our groups teamed up to file a joint patent on the use of this halorhodopsin to silence neural activity. As a testament to the unanticipated side effects of following innovation where it leads you, Xue and I got married in 2009 (and she is now an assistant professor at Boston University).

I continued to survey a wide variety of microorganisms for better silencing opsins: the inexpensiveness of gene synthesis meant that it was possible to rapidly obtain genes codon-optimized for mammalian expression, and to screen them for new and interesting light-drivable neural functions. Brian Chow (now an assistant professor at the University of Pennsylvania) joined my lab at MIT as a postdoctoral researcher, and began collaborating with Xue. In 2008 they identified a new class of neural silencer, the archaerhodopsins, which were not only capable of high-amplitude neural silencing—the first such opsin that could support 100 percent shutdown of neurons in the awake, behaving animal—but also were capable of rapid recovery after having been illuminated for extended durations, unlike halorhodopsins, which took minutes to recover after long-duration illumination.[13. B.Y. Chow et al., "High-performance genetically targetable optical neural silencing by light-driven proton pumps," Nature, 463:98-102, 2010.] Interestingly, the archaerhodopsins are light-driven outward pumps, similar to bacteriorhodopsin—they hyperpolarize neurons by pumping protons out of the cells. However, the resultant pH changes are as small as those produced by channelrhodopsins (which have proton conductances a million times greater than their sodium conductances), and well within the safe range of neuronal operation. Intriguingly, we discovered that the H. salinarum bacteriorhodopsin, the very first opsin characterized in the early 1970s, was able to mediate decent optical neural silencing, suggesting that perhaps opsins could have been applied to neuroscience decades ago.

For mammalian systems, viruses bearing genes encoding opsins have proven popular in experimental use, due to their ease of creation and use. These viruses achieve their specificity either by infecting only specific neurons, or by containing regulatory promoters that constrain opsin expression to certain kinds of neurons.

An increasing number of transgenic mouse lines are also now being created, in which an opsin is expressed in a given neuron type through transgenic methodologies. One popular hybrid strategy is to inject a virus that contains a Cre-activated genetic cassette encoding the opsin into one of the burgeoning number of mice that express Cre recombinase in specific neuron types, so that the opsin will only be produced in Cre recombinase-expressing neurons.[15. D. Atasoy et al., “A FLEX switch targets Channelrhodopsin-2 to multiple cell types for imaging and long-range circuit mapping,” J Neurosci, 28:7025-30, 2008.]
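The Cre-dependent targeting logic described above can be caricatured in a few lines of Python. This is a toy sketch under stated assumptions, not a model of any published construct: it assumes the virus packages the opsin coding sequence in the inverted orientation and that Cre recombinase simply flips it into the readable (sense) orientation. The pairs of incompatible lox sites that make the real FLEX switch irreversible are not modeled. `Neuron`, `expresses_opsin`, and the placeholder sequence `OPSIN_ORF` are hypothetical names invented for this example, and the sequence is not a real opsin gene.

```python
from dataclasses import dataclass
from typing import Optional

# Toy model of Cre-dependent (FLEX-style) opsin expression.
# Assumption: Cre just inverts the ORF; lox-site recombination is not modeled.
_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Return the reverse complement of a DNA string (A/C/G/T only)."""
    return seq.translate(_COMPLEMENT)[::-1]


@dataclass
class Neuron:
    cell_type: str
    expresses_cre: bool  # e.g., from a cell type-specific Cre mouse line
    infected: bool       # whether the injected virus reached this cell


def cassette_as_read(cell: Neuron, opsin_orf: str) -> Optional[str]:
    """Sequence as read from the promoter in this cell, or None if no virus."""
    if not cell.infected:
        return None
    packaged = reverse_complement(opsin_orf)  # inverted in the virus: not translatable
    if cell.expresses_cre:
        return reverse_complement(packaged)   # Cre flips the ORF into sense orientation
    return packaged


def expresses_opsin(cell: Neuron, opsin_orf: str) -> bool:
    """Opsin is made only if the ORF is read in the sense orientation."""
    return cassette_as_read(cell, opsin_orf) == opsin_orf


# Hypothetical, non-palindromic placeholder; NOT a real opsin sequence.
OPSIN_ORF = "ATGGCTAGCAAGTTCTAA"

if __name__ == "__main__":
    for cell in (Neuron("basket", True, True),
                 Neuron("pyramidal", False, True),
                 Neuron("basket", True, False)):
        print(cell.cell_type, "Cre:", cell.expresses_cre, "virus:", cell.infected,
              "opsin:", expresses_opsin(cell, OPSIN_ORF))
```

The point of the design is the AND gate: a cell needs both the virus and Cre before the opsin is made, so viral spread into neighboring Cre-negative neurons does not by itself produce expression.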

In 2009, in collaboration with the labs of Robert Desimone and Ann Graybiel at MIT, we published the first use of channelrhodopsin-2 in the nonhuman primate brain, showing that it could safely and effectively mediate neuron type–specific activation in the rhesus macaque without provoking neuron death or functional immune reactions.[16. X. Han et al., “Millisecond-timescale optical control of neural dynamics in the nonhuman primate brain,” Neuron, 62:191-98, 2009.] This paper opened up the possibility of translating optical neural stimulation into the clinic as a treatment modality, although clearly much more work is required to understand this potential application of optogenetics.

Edward Boyden leads the Synthetic Neurobiology Group at MIT, where he is the Benesse Career Development Professor and associate professor of biological engineering and brain and cognitive science at the MIT Media Lab and the MIT McGovern Institute. This article is adapted from a review in F1000 Biology Reports, DOI:10.3410/B3-11 (open access at http://f1000.com/reports/b/3/11). For citation purposes, please refer to that version.

http://www.the-scientist.com/?articles.view/articleNo/30756/title/The-Birth-of-Optogenetics/

By Edward S. Boyden | July 1, 2011

Blue light hits a neuron engineered to express opsin molecules on its surface, opening a channel through which ions pass into the cell and activate the neuron.

Credit: MIT McGovern Institute, Julie Pryor, Charles Jennings, Sputnik Animation, Ed Boyden

For a few years now, I’ve taught a course at MIT called “Principles of Neuroengineering.” The idea of the class is to get students thinking about how to create neurotechnology innovations: new inventions that can solve outstanding scientific questions or address unmet clinical needs. Designing neurotechnologies is difficult because of the complex properties of the brain: its inaccessibility, heterogeneity, fragility, anatomical richness, and high speed of operation. To illustrate the process, I decided to write a case study about the birth and development of an innovation with which I have been intimately involved: optogenetics, a toolset of genetically encoded molecules that, when targeted to specific neurons in the brain, allow the activity of those neurons to be driven or silenced by light.

A strategy: controlling the brain with light

As an undergraduate at MIT, I studied physics and electrical engineering and got a good deal of firsthand experience in designing methods to control complex systems. By the time I graduated, I had become quite interested in developing strategies for understanding and engineering the brain. After graduating in 1999, I traveled to Stanford to begin a PhD in neuroscience, setting up a home base in Richard Tsien’s lab. In my first year at Stanford I was fortunate enough to meet many nearby biologists willing to do collaborative experiments, ranging from attempting the assembly of complex neural circuits in vitro to behavioral experiments with rhesus macaques. For my thesis work, I joined the labs of Richard Tsien and of Jennifer Raymond in spring 2000, to study how neural circuits adapt in order to control movements of the body as the circumstances in the surrounding world change.

In parallel, I started thinking about new technologies for controlling the electrical activity of specific neuron types embedded within intact brain circuits. That spring, in brainstorming sessions that often ran late into the night, I discussed this problem with Karl Deisseroth, then a Stanford MD-PhD student also doing research in Tsien’s lab. We started to think about delivering stretch-sensitive ion channels to specific neurons, and then tethering magnetic beads selectively to the channels, so that applying an appropriate magnetic field would move the beads and open the ion channels, thus activating the targeted neurons.


The channelrhodopsin collaboration

In 2002 a pioneering paper from the lab of Gero Miesenböck showed that genetic expression of a three-gene Drosophila phototransduction cascade in neurons allowed the neurons to be excited by light, and suggested that the ability to activate specific neurons with light could serve as a tool for analyzing neural circuits.[3. B.V. Zemelman et al., "Selective photostimulation of genetically chARGed neurons," Neuron, 33:15-22, 2002.] But the light-driven currents mediated by this system were slow, and this technical issue may have been a factor that limited adoption of the tool.

This paper was fresh in my mind when, in fall 2003, Karl e-mailed me to express interest in revisiting the magnetic-bead stimulation idea as a potential project that we could pursue together later, when he had his own lab and I had finished my PhD and could join his lab as a postdoc. Karl was then a postdoctoral researcher in Robert Malenka’s lab (also at Stanford), and I was about halfway through my PhD. We explored the magnetic-bead idea between October 2003 and February 2004. Around that time I read a just-published paper by Georg Nagel, Ernst Bamberg, Peter Hegemann, and colleagues, announcing the discovery of channelrhodopsin-2 (ChR2), a light-gated cation channel, and noting that the protein could be used as a tool to depolarize cultured mammalian cells in response to light.[4. G. Nagel et al., "Channelrhodopsin-2, a directly light-gated cation-selective membrane channel," PNAS, 100:13940-45, 2003.]


CHANNELRHODOPSINS IN ACTION

A neuron expresses the light-gated cation channel channelrhodopsin-2 (green dots on the cell body) in its cell membrane (1). The neuron is illuminated by a brief pulse of blue light a few milliseconds long, which opens the channelrhodopsin-2 molecules (2), allowing positively charged ions to enter the cell and causing the neuron to fire an electrical pulse (3). In a neural network containing different kinds of cells (pyramidal cells, basket cells, etc.), the basket cells (small star-shaped cells) are selectively sensitized to light activation. When blue light hits the network, the basket cells fire electrical pulses (white highlights), while the surrounding neurons are not directly affected by the light (4). Once activated, however, the basket cells can modulate activity in the rest of the network.

Credit: MIT McGovern Institute, Julie Pryor, Charles Jennings, Sputnik Animation, Ed Boyden


Beyond luck: systematic discovery and engineering of optogenetic tools

An essential aspect of furthering this work is the free and open distribution of these optogenetic tools, even prior to publication. To facilitate teaching people how to use these tools, our lab regularly posts white papers on our website with details on reagents and optical hardware (a complete optogenetics setup costs as little as a few thousand dollars for all required hardware and consumables), and we have also partnered with nonprofit organizations such as Addgene and the University of North Carolina Gene Therapy Center Vector Core to distribute DNA and viruses, respectively. We regularly host visitors to observe experiments being done in our lab, seeking to encourage the community building that has been central to the development of optogenetics from the beginning.

As a case study, the birth of optogenetics offers a number of interesting insights into the blend of factors that can lead to the creation of a neurotechnological innovation. The original optogenetic tools were identified partly through serendipity, guided by a multidisciplinary convergence and a neuroscience-driven knowledge of what might make a good tool. Clearly, the original serendipity that fostered the formation of this concept, and that accompanied the initial quick attempt to see whether it would work in nerve cells, has now given way to the systematized luck of bioengineering, with its machines and algorithms designed to optimize the chances of finding something new. Many labs, driven by genomic mining and mutagenesis, are reporting the discovery of new opsins with improved light and color sensitivities and new ionic properties. It is to be hoped, of course, that as this systematized luck accelerates, we will stumble upon more innovations that can aid in dissecting the enormous complexity of the brain, beginning the cycle of invention again.

Putting the toolbox to work

These optogenetic tools are now in use by many hundreds of neuroscience and biology labs around the world. Opsins have been used to study how neurons contribute to information processing and behavior in organisms including C. elegans, Drosophila, zebrafish, mouse, rat, and nonhuman primate. Light sources such as conventional mercury and xenon lamps, light-emitting diodes, scanning lasers, femtosecond lasers, and other common microscopy equipment suffice for in vitro use.

In vivo mammalian use of these optogenetic reagents has been greatly facilitated by the availability of inexpensive lasers with optical-fiber outputs; the free end of the optical fiber is simply inserted into the brain of the live animal when needed,[14. A.M. Aravanis et al., “An optical neural interface: in vivo control of rodent motor cortex with integrated fiberoptic and optogenetic technology,” J Neural Eng, 4:S143-56, 2007.] or coupled at the time of experimentation to an implanted optical fiber.

An increasing number of transgenic mouse lines are also now being created, in which an opsin is expressed in a given neuron type through transgenic methodologies. One popular hybrid strategy is to inject a virus that contains a Cre-activated genetic cassette encoding the opsin into one of the burgeoning number of mouse lines that express Cre recombinase in specific neuron types, so that the opsin will only be produced in Cre recombinase-expressing neurons.[15. D. Atasoy et al., “A FLEX switch targets Channelrhodopsin-2 to multiple cell types for imaging and long-range circuit mapping,” J Neurosci, 28:7025-30, 2008.]

In 2009, in collaboration with the labs of Robert Desimone and Ann Graybiel at MIT, we published the first use of channelrhodopsin-2 in the nonhuman primate brain, showing that it could safely and effectively mediate neuron type–specific activation in the rhesus macaque without provoking neuron death or functional immune reactions.[16. X. Han et al., “Millisecond-Timescale Optical Control of Neural Dynamics in the Nonhuman Primate Brain,” Neuron, 62:191-98, 2009.] This paper opened up the possibility of translating the technique of optical neural stimulation into the clinic as a treatment modality, although clearly much more work is required to understand this potential application of optogenetics.

http://www.the-scientist.com/?articles.view/articleNo/30756/title/The-Birth-of-Optogenetics/

Monday, March 18, 2013

A NOBEL PRIZE FOR THE DARK SIDE

“Science today is about getting some results, framing those results in an attention-grabbing media release and basking in the glory.”

—Kerry Cue, Canberra Times, 5 October 2011

On October 4, 2011, the Nobel Prize in Physics was awarded to three astrophysicists for “THE ACCELERATING UNIVERSE.” Prof. Perlmutter of the University of California, Berkeley, was awarded half of the 10m Swedish krona (US$1,456,000 or £940,000) prize, with Prof. Schmidt of the Australian National University and Prof. Riess of Johns Hopkins University and the Space Telescope Science Institute sharing the other half. The notion of an accelerating expansion of the universe is based on observations of supernovae at high redshift by two teams, the Supernova Cosmology Project and the High-Z Supernova Search Team.

Saul Perlmutter pictured with a view of supernova 1987A in the background. Photo: Lawrence Berkeley National Laboratory

Brian Schmidt of the Australian National University. Photo by Belinda Pratten.

“The present boastfulness of the expounders and the gullibility of the listeners alike violate that critical spirit which is supposedly the hallmark of science.”

—Jacques Barzun, Science: The Glorious Entertainment

I attended a public lecture recently on “Cosmological Confusion… revealing the common misconceptions about the big bang, the expansion of the universe and cosmic horizons,” presented at the Australian National University by an award-winning Australian astrophysicist, Dr. Tamara Davis.

The particular interests of Dr. Davis are the mysteries posed by ‘dark matter’ and ‘dark energy,’ hence the title of this piece. The theatre was packed and the speaker animated like an excited schoolchild who has done her homework and is proud to show the class. Her first question to the packed hall was, “How many in the audience have done some physics?” It seemed the majority had. So it was depressing to listen to the questions throughout the performance and recognize that the noted cultural historian Jacques Barzun was right. Also, Halton Arp’s appraisal of the effect of modern education seemed fitting, “If you take a highly intelligent person and give them the best possible, elite education, then you will most likely wind up with an academic who is completely impervious to reality.”

Carl Linnaeus in 1758 showed characteristic academic hubris and anthropocentrism when he named our species Homo sapiens sapiens (“sapiens” is Latin for “wise” or “knowing”). But it is questionable, as a recent (18th August) correspondent to Nature wrote, whether we “merit a single ‘sapiens,’ let alone the two we now bear.”

To begin, big bang cosmology dismisses the physics principle of no creation from nothing. It then proceeds with the falsehood that Hubble discovered the expansion of the universe. He didn’t; he found the apparent redshift/distance relationship (actually a redshift/luminosity relationship), which to his death he did not feel was due to an expanding universe.

This misrepresentation is followed by the false assumption that the evolution of an expanding universe can be deduced from Einstein’s unphysical theory of gravity, which combines two distinct concepts, space and time, into some ‘thing’ with four dimensions called “the fabric of space-time.” I should like to know what this “fabric” is made from and how matter can be made to shape it. Space is the concept of the relationship between objects in three orthogonal dimensions only. Time is the concept of the interval between events and has nothing to do with Einstein’s physical clocks. Clearly time has no physical dimension. David Harriman says, “A concept detached from reality can be like a runaway train, destroying everything in its path.” This is certainly true of Einstein’s theories of relativity.

Special relativity is no different to declaring that the apparent dwindling size of a departing train and the lower pitch of its whistle are due to a real shrinking of space on the train and slowing of its clocks. We know from experience that isn’t true. The farce must eventually play out like the cartoon character walking off the edge of a cliff and not falling until the realization dawns that there is no support. But how long must we wait? We are swiftly approaching the centennial of the big bang. The suspense has become tedious and it is costing us dearly. Some people are getting angry.

All of the ‘dark’ things in astronomy are artefacts of a crackpot cosmology. The ‘dark energy’ model of the universe demands that eventually all of the stars will disappear and there will be eternal darkness. In the words of Brian Schmidt, “The future for the universe appears very bleak.” He confirms my portrayal of big bang cosmology as “hope less.”

The Nobel Prize Committee had the opportunity to consider a number of rational arguments and evidence against an accelerating expanding universe:

1. General Relativity (GR) is wrong — we don’t understand gravity. Brian Schmidt mentions this possibility and labels it “heretical.” But GR must be wrong because space is not some ‘thing’ that can be warped mysteriously by the presence of matter. The math of GR explains nothing.

2. Supernovae are not understood (Schmidt mentions this possibility too). This also should have been obvious, because the theory is so complex and adjustable that it cannot predict anything. The model involving a sudden explosion of an accreting white dwarf is unverified and does not predict the link between peak luminosity and duration of type Ia supernova ‘standard candles’ or the complex bipolar pattern of their remnants.

3. The universe is not expanding — Hubble was right. If the redshift is not simply a Doppler effect, “the region observed appears as a small, homogeneous, but insignificant portion of a universe extended indefinitely both in space and time.”

4. Concerning intrinsic redshift, Halton Arp and his colleagues long ago proved that there is, as Hubble wrote, “a new principle of nature” to be discovered.

5. There can be no ‘dark energy’ in ‘empty space.’ E=mc² tells us that energy (E) is an intrinsic property of matter. There is no mysterious disembodied energy available to accelerate any ‘thing,’ much less accelerate the concept of space.

In failing to address these points, the Nobel Committee perpetuates the lack of progress in science. We are paying untold billions of dollars for experiments meant to detect the phantoms springing endlessly from delusional theories. For example, gravitational wave telescopes are being built and continually refined in sensitivity to discover the imaginary “ripples in the fabric of space-time.” The scientists might as well be medieval scholars theorizing about the number of angels that could dance on the head of a pin. By the end of 2010, the Large Hadron Collider had cost more than US$10 billion searching for the mythical Higgs boson that is supposed to cause all other particles to exhibit mass! Here, once again, E=mc² shows that mass (m) is an intrinsic property of matter. It is futile to look elsewhere for a cause. In a scientific field, it is dangerous to rely on a single idea. The peril for cosmologists is clear. They have developed a monoculture: an urban myth called the big bang. Every surprising discovery must be force-fitted into the myth regardless of its absurdities. Scientists are presently so far ‘through the looking glass’ that the real universe we observe constitutes a mere 4% of their imaginary one.

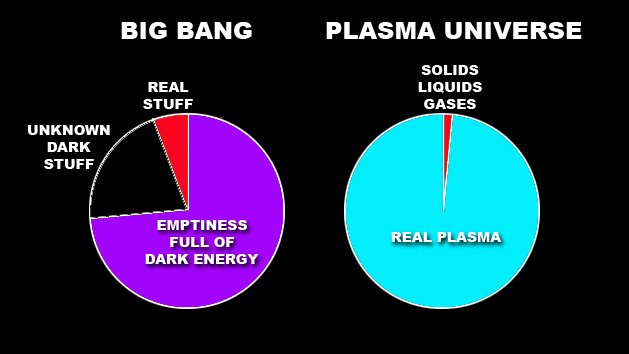

The big bang universe where 96% of the universe is imaginary. The plasma universe has >99% of the universe in the form of plasma and <1% solids, liquids and neutral gases.

The most profound and important demand we must make of astrophysicists is to justify their unawareness of this freely available ‘second idea.’

‘Dark energy’ is supposed to make up 73% of the universe. The evidence interpreted in this weird way comes from comparing the redshift distances of galaxies with the brightness of their type Ia supernovae, used as a ‘standard candle.’ It was found that the supernovae in highly redshifted galaxies are fainter than expected, indicating that they are further away than previously estimated. This, in turn, implied a startling accelerating expansion of the universe, according to the big bang model. It is like throwing a ball into the air and having it accelerate upwards. So a mysterious ‘dark energy’ was invented, which fills the vacuum and works against gravity. An argument of the Douglas Adams “Infinite Improbability Drive” type was called upon to produce this ‘vacuum energy.’ The language defining vacuum energy is revealing: “Vacuum energy is an underlying background energy that exists in space even when the space is devoid of matter (free space). The concept of vacuum energy has been deduced from the concept of virtual particles, which is itself derived from the energy-time uncertainty principle.” You may notice the absurdity of the concept, given that the vacuum contains no matter, ‘background’ or otherwise, yet it is supposed to contain energy. Adams was parodying Heisenberg’s ‘uncertainty principle’ of quantum mechanics. Quantum mechanics is merely a probabilistic description of what happens at the scale of subatomic particles without any real physical understanding of cause and effect. Heisenberg was uncertain because he didn’t know what he was talking about. However, he was truthful when he wrote, “we still lack some essential feature in our image of the structure of matter.” The concept of ‘virtual particles’ winking in and out of existence defies the aforementioned first principle of physics, “Thou shalt not magically materialize nor dematerialize matter.” Calling that matter ‘virtual’ merely underscores its non-reality.

Indeed, the ‘discovery’ of the acceleration of the expanding universe is an interpretation based on total ignorance of the real nature of stars and the ‘standard candle,’ the type Ia supernova. A type Ia supernova is supposed to be due to a hypothetical series of incredible events involving a white dwarf star. But as I have shown, a supernova is simply an electrical explosion of a star that draws its energy from a galactic circuit. The remarkable brilliance of a supernova, which can exceed that of its host galaxy for days or weeks, is explained by the kind of power transmission line failure that can also be seen occasionally on Earth. If such a circuit is suddenly opened, the electromagnetic energy stored in the extensive circuit is concentrated at the point where the circuit is broken, producing catastrophic arcing. Stars too can ‘open their circuit’ due to a plasma instability causing, for example, a magnetic ‘pinch off’ of the interstellar Birkeland current. The ‘standard candle’ effect and light curve are then simply due to the circuit parameters of galactic transmission lines, which power all stars.

Spectacular arcing at a 500,000-volt circuit breaker.

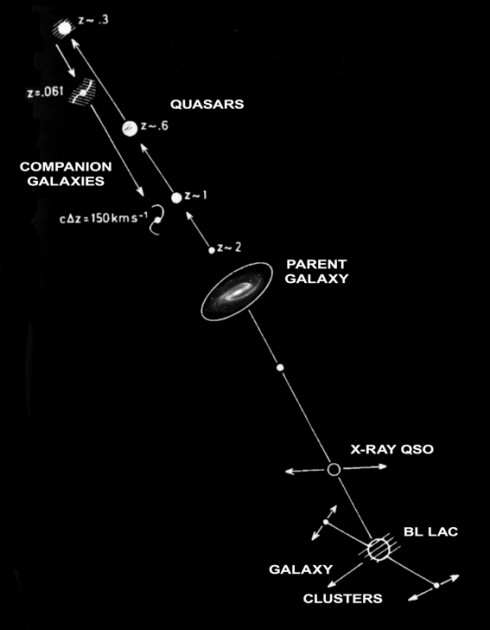

Arp’s galactic ‘family tree’ showing the birth of quasars with high redshift (z), which decreases stepwise as they age and eventually form companion galaxies and progenitors of galactic clusters.

The use of the title The Dark Side for Dr. Davis’ cosmology talk seems unconsciously apposite. It was Joseph Campbell who said, “We live our mythology.” And George Lucas attributes the success of his Star Wars films, which rely on a degenerate, evil ‘dark side,’ to reading Campbell’s books. The triumph of the big bang myth over common sense and logic supports Campbell’s assessment. And the showbiz appeal of Lucas’ mythic approach to storytelling is evident in the ‘dark side’ of cosmology. Scientists live their mythology too. Science’s “cosmic confusion” is self-inflicted.

The Electric Universe paradigm is distinguished by its interdisciplinary origin in explaining mythology by the use of forensic scientific techniques. It demands the lonely courage to give up familiar landmarks and beliefs. Sitting in the tame audience the other evening, listening to the professor of astrophysics, I was reminded of The Galaxy Song from Monty Python, which ends with the painfully perceptive lines, “And pray there is intelligent life somewhere up in space, ‘cause there’s bugger-all down here on Earth!”

Wal Thornhill

http://www.holoscience.com/wp/a-nobel-prize-for-the-dark-side/

Thursday, March 7, 2013

WHAT IS METAPROGRAMMING? - THE BLOG OF J.D. MOYER SYSTEMS FOR LIVING WELL

For a number of decades I’ve been interested in self-improvement via a method I like to call metaprogramming. I was first exposed to the term via John C. Lilly’s Programming and Metaprogramming in the Human Biocomputer (a summary report to Lilly’s employer at the time, The National Institute of Mental Health [NIMH]). Lilly explored the idea that all human behavior is controlled by genetic and neurological programs, and that via intense introspection, psychedelic drugs, and isolation tanks, human beings can learn to reprogram their own computers. Far out, man. As the fields of psychology, neurophysiology, and cognitive science have progressed, we’ve learned that the computer/brain analogy has its limitations. As for psychedelics, they have their limitations as well; they are so effective at disrupting rigid mental structures (opening up minds) that they can leave their heavy users a bit lacking in structure. From my own observations, what the heavy user of psychedelics stands to gain in creativity, he may lose in productivity, or stability, or coherence.

Those issues aside, I still love the term metaprogramming. We are creatures of habit (programs), and one of the most effective (if not the only) ways we can modify our own behavior is by hacking our own habits. We can program our programs: thus, metaprogramming. This is a slightly different use of the term than Lilly’s; what I call metaprogramming he probably would have called selfmetaprogramming (he used metaprograms to refer to higher level programs in the human biocomputer: habits and learned knowledge and cultural norms as opposed to instincts and other “hardwired” behaviors).

Effective Metaprogramming

Effective metaprogramming requires a degree of self-awareness and self-observation. It also requires a forgiving attitude towards oneself; we can more clearly observe and take responsibility for our own behaviors (including the destructive ones), if we refrain from unnecessary self-flagellation.

Most importantly, effective metaprogramming requires clear targets for behavior. In my experience, coming up with these targets takes an enormous amount of time and energy. It’s hard to decide how you want to behave, in every area of your life. It’s much easier to just continue on cruise control, relying on your current set of habits to carry you towards whatever fate you’re currently pointed at.

And what if you pick a target for your own behavior, implement it, and don’t like the results? Course corrections are part of the territory.

Religion (Do It Our Way)

If you don’t want to come up with your own set of behavioral guidelines, there’s always someone willing to offer (or sell) you theirs. Moses, lugging around his ten commandments, or Tony Robbins, with his DVDs.

Religion has historically offered various sets of metaprogramming tools; rules for how to behave, and in some cases, techniques and practices to help you out (like Buddhist meditation). If you decide to follow or join a religion, you have to watch out for the extra baggage. Some religions come with threats if you don’t follow the rules. The threats can be real (banishment from the group), or made up (banishment to Hell). Judaism is perhaps the exception; there are lots of rules but the main punishment for not following them (as far as I can tell) is that you simply become a less observant Jew.

I’m an atheist, more or less, and a fan of the scientific method and scientific inquiry. I also appreciate the work of the philosopher Daniel Dennett and the evolutionary biologist Richard Dawkins, both of whom have taken strong stands against organized religion. These stands are excusable, insofar as they attack outmoded religious beliefs (creationism, the afterlife, inferiority of women, and so forth) or crime (like the abuse of children by priests; Dawkins is actually trying to arrest the Pope). But religion offers much more than belief, and in some religions (like Judaism) belief matters very little. Religions offer behavioral systems, practices, rituals, myths, stories, and traditions, all of which are tremendous, irreplaceable cultural resources.

Some religions are attempting the leap into modernity. The Dalai Lama has taken an active interest in neuroscience. My wife’s rabbi is a self-proclaimed atheist. The Vatican has put out a statement suggesting that Darwinian evolution is not in conflict with the official doctrines of Catholicism (a nice PR move, but in my opinion it’s only because they don’t fully understand the principles of Darwinian evolution; Daniel Dennett called Darwin’s idea “dangerous” for good reason). In the long run, religions are institutions, and they’ll do what they have to in order to survive. The term “God” will be redefined, as necessary, to keep the pews warm and the tithing buckets full. Evolutionary biologists (with their logical, literal thinking) are tilting at windmills when they attack religion; they are no match for the nimble, poetic minds of theologians.

As much as I value religions in the abstract, I haven’t yet found one I can deal with personally. My wife finds the endless rules of Judaism to be invigorating; following them gives her real spiritual satisfaction. I find them to be bizarre and confusing (maybe this is because I’m not Jewish, but I suspect some Jews would agree with me).

Still, I have liberally borrowed from the world’s religions while devising my own metaprogramming system. Jesus’s Golden Rule. Islam’s dislike of debt. A good chunk of the Buddha’s Eightfold Path. And at least a few of the Ten Commandments.

Help Yourself

Want to win friends and influence people? Change the spelling of your last name to match that of a world-famous captain of industry!

The modern self-help movement has had its share of both inspired individuals (like Tony Robbins) and charismatic but ultimately abusive ones (like the late Frederick Lenz).

I’m a fan of Robbins, for example, because his teachings are open (he does sell products and seminars, but he also gives away an enormous amount of content). Same goes for Steve Pavlina, Les Brown, and even Timothy Ferriss. All offer up their own insights and behavioral modification (metaprogramming) systems with a “try this and see if it works for you” attitude. It’s clear they are interested in spreading their message first, in making a living second, and not at all interested in controlling people or accumulating subjugates.

I’m also fascinated by the late anti-guru U.G. Krishnamurti (not to be confused with the more popular J. Krishnamurti). U.G., by all accounts, was unequivocally an enlightened being. The interesting bit was his absolute refusal to attempt to teach, pass on, or even recommend his own higher state of consciousness. Throughout his life, he refused to take on any followers or officially publish any of his writings. I’ll write about U.G. in more detail in another post.

Sinister Intentions

At the unfortunate intersection between religion and self-help lies the world of cults. Cult leaders and cult organizations can be spotted by the following attributes. Stay away!

- secret, often bizarre teachings

- brainwashing techniques (sleep deprivation, emotional trauma, isolation, sensory overload)

- enormous fees required for membership and/or access to teachings

- requirement to cut off contact from family and/or friends (nonmembers)

- coercive methods to control members (intimidation, blackmail, even violence)

There’s nothing wrong with using somebody else’s self-improvement/behavioral modification/metaprogramming system, either ancient or modern, in whole or in part, as long as you shop around carefully. Or, you can invent your own. As a third alternative, if you are already happy with the current state of your habits (and where they are steering you in life), you may not feel compelled to bother with changing yourself.

Baby with the Bathwater

The field of self-improvement is full of half-truths, hucksters, pseudoscience, charlatans, snake oil and snake oil salesmen, bizarre beliefs, true believers, smelly hippies, narcissistic baby boomers, pitiful cases, get-rich-quick schemers, crystal wavers, cult leaders, and weird dieters, and is thus always ripe for parody (my favorite is this video parody of The Secret). A down-to-earth, rational person could be excused for steering clear of the self-improvement realm altogether.

On the other hand, energy we invest in improving our own habits (programs), including habits of thought and perception, is probably one of the best investments we can make in our own lives. Even minor improvements can yield enormous dividends in the long run.

I’ll continue to share my thoughts about metaprogramming in this blog, including my core metaprogramming principles (not as a prescriptive, but rather in the spirit of open-source code sharing). As a quick preview, I’ll offer that my own principles involve the following areas:

- Maintaining a High Quality of Consciousness

- Taking Radical Responsibility for All Your Actions, and Every Aspect Of Your Life

- Creating a System of Functional Vitality

THE SCIENCE OF ENERGY AND THOUGHT. SUBCONSCIOUS MIND POWER

Sunday, March 25, 2012

A scientific approach explaining the power of thought. We have all heard before, 'Your thoughts create your reality'. Well, new quantum physics studies support this idea.

Learn about recent research on how the mind can influence the behavior of subatomic particles and physical matter. If you enjoy the video, please pass it on to friends and family.

The power of our thoughts and feelings allows us to manifest our desires. The challenge is in harnessing our ever shifting perspectives so that we can focus upon the thoughts that can make a positive difference.

Working with our thoughts consciously allows our awareness and experience of life to unfold its potential. The key is to be open to change and express ourselves from a higher perspective on life.

Our past is but a memory and the future is in our imagination; right now, in the present moment, is our true point of power.

Dr. Joseph Murphy's book on the power of the Subconscious Mind is a practical guide to understand and learn to use the incredible powers you possess within you. GYHF

“By choosing your thoughts, and by selecting which emotional currents you will release and which you will reinforce, you determine the quality of your Light. You determine the effects that you will have upon others, and the nature of the experience of your life.” -Gary Zukav

http://www.knowledgeoftoday.org/2012/03/thought-definition-life-energy-power.html

Wednesday, March 6, 2013

DETECTION AND ATTRIBUTION OF CLIMATE CHANGE: A REGIONAL PERSPECTIVE

AUTHORS: Peter A. Stott, Nathan P. Gillett, Gabriele C. Hegerl, David J. Karoly, Dáithí A. Stone, Xuebin Zhang, Francis Zwiers. Article first published online: 5 MAR 2010

DOI: 10.1002/wcc.34 Copyright © 2010 John Wiley & Sons, Inc.

Abstract

The Intergovernmental Panel on Climate Change fourth assessment report, published in 2007, came to a more confident assessment of the causes of global temperature change than previous reports and concluded that ‘it is likely that there has been significant anthropogenic warming over the past 50 years averaged over each continent except Antarctica.’ Since then, warming over Antarctica has also been attributed to human influence, and further evidence has accumulated attributing a much wider range of climate changes to human activities. Such changes are broadly consistent with theoretical understanding, and climate model simulations, of how the planet is expected to respond. This paper reviews this evidence from a regional perspective to reflect a growing interest in understanding the regional effects of climate change, which can differ markedly across the globe. We set out the methodological basis for detection and attribution and discuss the spatial scales on which it is possible to make robust attribution statements. We review the evidence showing significant human-induced changes in regional temperatures, and for the effects of external forcings on changes in the hydrological cycle, the cryosphere, circulation changes, oceanic changes, and changes in extremes. We then discuss future challenges for the science of attribution. To better assess the pace of change, and to understand more about the regional changes to which societies need to adapt, we will need to refine our understanding of the effects of external forcing and internal variability.

1. Introduction

Evidence for an anthropogenic contribution to climate trends over the twentieth century is accumulating at a rapid pace [see Mitchell et al. (2001) and International Ad Hoc Detection and Attribution Group (2005) for detailed reviews]. The greenhouse gas signal in global surface temperature can be distinguished from internal climate variability and from the response to other forcings (such as changes in solar radiation, volcanism, and anthropogenic forcings other than greenhouse gases) for global temperature changes (e.g., Santer et al. 1996; Hegerl et al. 1997; Tett et al. 1999; Stott et al. 2001) and also for continental-scale temperature (Stott 2003; Zwiers and Zhang 2003; Karoly et al. 2003; Karoly and Braganza 2005). Evidence for anthropogenic signals is also emerging in other variables, such as sea level pressure (Gillett et al. 2003b), ocean heat content (Barnett et al. 2001; Levitus et al. 2001, 2005; Reichert et al. 2002; Wong et al. 2001), ocean salinity (Wong et al. 1999; Curry et al. 2003), and tropopause height (Santer et al. 2003b).

The goal of this paper is to discuss new directions and open questions in research toward the detection and attribution of climate change signals in key components of the climate system, and in societally relevant variables. We do not intend to provide a detailed review of present accomplishments, for which we refer the reader to International Ad Hoc Detection and Attribution Group (2005).

Detection has been defined as the process of demonstrating that an observed change is significantly different (in a statistical sense) from natural internal climate variability, by which we mean the chaotic variation of the climate system that occurs in the absence of anomalous external natural or anthropogenic forcing (Mitchell et al. 2001). Attribution of anthropogenic climate change is generally understood to require a demonstration that the detected change is consistent with simulated change driven by a combination of external forcings, including anthropogenic changes in the composition of the atmosphere and internal variability, and not consistent with alternative explanations of recent climate change that exclude important forcings [see Houghton et al. (2001) for a more thorough discussion]. This implies that all important forcing mechanisms, natural (e.g., changes in solar radiation and volcanism) and anthropogenic, should be considered in a full attribution study.
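Detection, as defined above, asks how often internal variability alone would produce a change as large as the one observed. The following is a minimal sketch of that idea, not the authors' method. It assumes a linear trend as the test statistic, uses white-noise synthetic data in place of both the observations and an unforced control simulation, and uses hypothetical function names (`linear_trend`, `detection_p_value`). Real studies must also account for autocorrelation, spatial patterns, and model error.

```python
import numpy as np


def linear_trend(series):
    """Least-squares slope of `series` against its time index (units per step)."""
    t = np.arange(series.size)
    slope, _intercept = np.polyfit(t, series, 1)
    return float(slope)


def detection_p_value(observed, control):
    """Two-sided empirical p-value of the observed trend against internal variability.

    `control` is assumed to be a long unforced control simulation, so trends
    computed over its non-overlapping segments (each as long as `observed`)
    sample internal variability only.
    """
    n = observed.size
    n_segments = control.size // n
    if n_segments < 1:
        raise ValueError("control run must be at least as long as the observations")
    segments = control[: n_segments * n].reshape(n_segments, n)
    control_trends = np.array([linear_trend(seg) for seg in segments])
    # Fraction of unforced trends at least as large in magnitude as the observed one.
    return float(np.mean(np.abs(control_trends) >= abs(linear_trend(observed))))


# --- Synthetic illustration (all values hypothetical) ---
rng = np.random.default_rng(0)
control = rng.normal(0.0, 0.2, size=50 * 200)                    # 200 unforced 50-step segments
observed = 0.02 * np.arange(50) + rng.normal(0.0, 0.2, size=50)  # imposed trend of 0.02 per step
p = detection_p_value(observed, control)
print(f"p = {p:.3f}")
```

A small p means that a trend this large rarely arises from the simulated internal variability alone. In the definition above, that is what "detected" means. Attribution requires the further step of showing that the change matches the simulated forced response.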

Detection and attribution therefore provides a rigorous test of model-simulated transient change. In cases where the observed change is consistent with changes simulated in response to historical forcing, as for large-scale surface and ocean temperatures, these emerging anthropogenic signals enhance the credibility of climate model simulations of future climate change. In cases where a significant discrepancy is found between simulated and observed changes, this raises important questions about the accuracy of the model simulations and of the forcings used in them. It may also highlight a need to revisit uncertainty estimates for observed changes.

Beyond model evaluation, a further important application of detection and attribution studies is to obtain information on the uncertainty range of future climate change. Anthropogenic signals that have been estimated from the twentieth century can be used to extrapolate model signals into the twenty-first century and estimate uncertainty ranges based on observations (Stott and Kettleborough 2002; Allen et al. 2000). This is important since there is no guarantee that the spread of model output fully represents the uncertainty of future change. Techniques related to detection approaches can also be used to estimate key climate parameters, such as the equilibrium global temperature increase associated with CO2 doubling (“climate sensitivity”) or the heat taken up by the ocean (e.g., Forest et al. 2002) to further constrain model simulations of future climate change.
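As a minimal sketch of the scaling idea, the function below turns a model's projected warming into an observationally constrained range. It assumes a single signal whose scaling factor β has a Gaussian sampling distribution with standard error `beta_se`, and that the same β carries over unchanged from the twentieth to the twenty-first century (the linearity assumption behind such extrapolation). The function name and all numbers are hypothetical, not taken from the cited studies.

```python
from statistics import NormalDist


def constrained_projection(model_warming: float, beta_hat: float, beta_se: float,
                           level: float = 0.90) -> tuple[float, float, float]:
    """Scale a model's projected warming by an estimated scaling factor.

    Returns (lower, best, upper) for the central `level` range, assuming
    beta ~ Normal(beta_hat, beta_se**2) and that beta estimated over the
    historical period also applies to the projection period (hypothetical helper).
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for a 90% range
    beta_low = beta_hat - z * beta_se
    beta_high = beta_hat + z * beta_se
    return (beta_low * model_warming,
            beta_hat * model_warming,
            beta_high * model_warming)


# Hypothetical inputs: a model projecting 3.0 K, and observations suggesting
# the model response should be scaled by 0.9 with a standard error of 0.15.
low, best, high = constrained_projection(3.0, 0.9, 0.15)
print(f"{low:.2f} K to {high:.2f} K (best estimate {best:.2f} K)")
```

The width of the resulting range is set by the observational constraint on β rather than by the spread among models.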

Mitchell et al. (2001) and International Ad Hoc Detection and Attribution Group (2005) give an extensive overview of detection and attribution methods. One of the most widely used, and arguably the most efficient, methods for detection and attribution is “optimal fingerprinting.” This is a generalized multivariate regression that uses a maximum likelihood method (Hasselmann 1979, 1997; Allen and Tett 1999) to estimate the amplitude of externally forced signals in observations.

The regression model attempts to represent the observed record y, organized as a vector in space and time, from a set of n response (signal) patterns that are concatenated in a matrix X, using the linear assumption

$$y = X\beta + u.$$

Climate change signal patterns (also called fingerprints) are usually derived from model simulations [e.g., with a coupled general circulation model (CGCM)]. The vector β contains the scaling factors that adjust the amplitude of each of those signal patterns to best match the observed amplitude, and u is a realization of internal climate variability. Vector u is assumed to be a realization of a Gaussian random vector (see below for discussion). Long “control” simulations with CGCMs, that is, simulations without anomalous external forcing, are typically used to estimate the internal climate variability and the resulting uncertainty in the scaling factors β.
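A minimal sketch of the estimation step follows, written as plain generalized least squares: β̂ = (XᵀC⁻¹X)⁻¹XᵀC⁻¹y, with covariance (XᵀC⁻¹X)⁻¹, where C is the covariance of u. To stay dependency-free it assumes that y and the fingerprints have already been projected onto the leading EOFs of a control run, so that C is diagonal (its entries being the EOF eigenvalues). The function names are hypothetical; refinements used in practice, such as estimating uncertainty from an independent control segment or allowing for noise in the fingerprints, are not shown.

```python
def _invert(a):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("normal matrix is singular (collinear fingerprints?)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def optimal_fingerprint(y, signals, noise_var):
    """GLS estimate of beta in y = X beta + u with diagonal noise covariance.

    y         -- observations, length m (e.g. projections on control-run EOFs)
    signals   -- list of n fingerprints, each of length m (the columns of X)
    noise_var -- length-m variances of u (the diagonal of C)
    Returns (beta_hat, beta_cov), where beta_cov = (X^T C^-1 X)^-1.
    """
    n = len(signals)
    w = [1.0 / v for v in noise_var]  # diagonal of C^-1
    # Normal-equation matrix X^T C^-1 X and right-hand side X^T C^-1 y
    a = [[sum(wk * si[k] * sj[k] for k, wk in enumerate(w)) for sj in signals]
         for si in signals]
    b = [sum(wk * si[k] * y[k] for k, wk in enumerate(w)) for si in signals]
    cov = _invert(a)
    beta = [sum(cov[i][j] * b[j] for j in range(n)) for i in range(n)]
    return beta, cov


# Synthetic check: noise-free "observations" built as 1.0 * x1 + 0.5 * x2.
x1 = [1.0, 2.0, 3.0, 4.0]
x2 = [1.0, 0.0, -1.0, 0.5]
obs = [1.0 * p + 0.5 * q for p, q in zip(x1, x2)]
beta_hat, beta_cov = optimal_fingerprint(obs, [x1, x2], [1.0, 2.0, 1.0, 0.5])
print(beta_hat)
```

Weighting by C⁻¹ gives less weight to patterns of large internal variability, which is what makes the estimate "optimal" in the maximum likelihood sense under the Gaussian assumption.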

Inferences about detection and attribution in the standard approach are then based on hypothesis testing. For detection, this involves testing the null hypothesis that the amplitude of a given signal is consistent with zero (if this is not the case, it is detected); attribution is assessed using the attribution consistency test (Allen and Tett 1999; see also Hasselmann 1997), which evaluates the null hypothesis that the amplitude vector β is a vector of ones (i.e., the model signal does not need to be rescaled to match the observations). A complete attribution assessment considers competing mechanisms of climate change as comprehensively as possible, as discussed by Mitchell et al. (2001). Increasingly, Bayesian approaches are used as an alternative to the standard approach. In Bayesian approaches, inferences are based on a posterior distribution that blends evidence from the observations with independent prior information that is represented by a prior distribution [e.g., Berliner et al. 2000; Schnur and Hasselmann 2004; Lee et al. 2005; see International Ad Hoc Detection and Attribution Group (2005) for a more complete discussion and results]. Since Bayesian approaches can incorporate multiple lines of evidence and account elegantly for uncertainties in various components of the detection and attribution effort, we expect that they will be very helpful for variables with considerable observational and model uncertainty.
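For a single signal, both tests and a simple Bayesian update can be sketched in a few lines. This assumes β̂ is Gaussian with a known standard error; published studies typically use t or F statistics with degrees of freedom set by the control run, and richer priors than the conjugate Gaussian used here. The attribution check is only meaningful for a signal that has been detected. All function names are hypothetical.

```python
from statistics import NormalDist


def detection_attribution(beta_hat: float, beta_se: float, level: float = 0.90):
    """Single-signal detection and attribution-consistency checks.

    Assumes beta_hat ~ Normal(beta, beta_se**2) with known beta_se.
    Returns (detected, consistent, (low, high)).
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)
    low, high = beta_hat - z * beta_se, beta_hat + z * beta_se
    detected = not (low <= 0.0 <= high)  # reject H0: beta = 0
    consistent = low <= 1.0 <= high      # cannot reject H0: beta = 1
    return detected, consistent, (low, high)


def gaussian_posterior(prior_mean: float, prior_sd: float,
                       beta_hat: float, beta_se: float):
    """Conjugate update of a Gaussian prior on beta with a Gaussian likelihood.

    Returns (posterior_mean, posterior_variance); precisions simply add.
    """
    prior_prec = 1.0 / prior_sd ** 2
    data_prec = 1.0 / beta_se ** 2
    post_var = 1.0 / (prior_prec + data_prec)
    post_mean = post_var * (prior_prec * prior_mean + data_prec * beta_hat)
    return post_mean, post_var


# Hypothetical estimate: beta_hat = 1.0 with standard error 0.2.
print(detection_attribution(1.0, 0.2))
print(gaussian_posterior(1.0, 0.5, 1.0, 0.2))
```

The posterior is pulled toward whichever source of information, prior or observations, has the higher precision.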

As we move toward detection and attribution studies on smaller spatial and temporal scales and with nontemperature variables, new challenges arise that are related to noise and uncertainty in signal patterns, dealing with non-Gaussian variables and facing data limitations. These are now discussed.

There is an increasing amount of observational evidence for changes within the ocean, both at regional and global scales (e.g., Bindoff and Church 1992; Wong et al. 1999; Wong et al. 2001; Dickson et al. 2001; Curry et al. 2003; Levitus et al. 2001; Aoki et al. 2005). Many of the observed changes in the ocean are from studies of the heat storage (Ishii et al. 2003; White et al. 2003; Willis et al. 2004; Levitus et al. 2005).

These studies all show that the global heat content of the oceans has been increasing since the 1950s. For the period 1993–2003, the rate of increase is between 0.7 and 0.86 W m⁻². The longer-term average increase in heat content (1955–98) over the 0–3000-m layer of the ocean is 0.2 W m⁻², equivalent to a mean warming of that layer of 0.037°C. These observed changes in ocean heat content are consistent with changes simulated by state-of-the-art coupled climate models, and they can be detected and attributed to anthropogenic forcing (e.g., Barnett et al. 2001; Levitus et al. 2001; Reichert et al. 2002). However, total ocean heat content is affected by observational sampling uncertainty (Gregory et al. 2004). Since the ocean is a major source of uncertainty in future climate change (see Houghton et al. 2001), attempting to detect and quantify ocean climate change in variables focusing on ocean physics, such as water mass characteristics, will increase confidence in large-scale simulations of climate change in the ocean and our ability to simulate future ocean changes.
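The link between a heat-uptake rate in W m⁻² and a layer-mean warming is simple arithmetic: energy is flux × area × time, and warming is energy divided by the heat capacity ρ c_p A H of the layer. The sketch below assumes that the 0.2 W m⁻² figure is averaged over the whole surface of the Earth (about 5.1 × 10¹⁴ m²), that 1955–98 spans about 44 years, and uses typical seawater density and heat capacity with a uniform 3000-m layer under the ocean surface (about 3.6 × 10¹⁴ m²). These constants and the helper names are assumptions, and the uniform depth ignores bathymetry, so the estimate is only a rough consistency check.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year in seconds


def heat_gained(flux_w_m2: float, area_m2: float, years: float) -> float:
    """Energy (J) accumulated by a constant net flux over an area and period."""
    return flux_w_m2 * area_m2 * years * SECONDS_PER_YEAR


def layer_warming(energy_j: float, area_m2: float, depth_m: float,
                  rho: float = 1025.0, cp: float = 3990.0) -> float:
    """Mean temperature change (K) of a water layer of uniform depth.

    rho (kg m^-3) and cp (J kg^-1 K^-1) are assumed typical seawater values;
    a uniform depth ignores bathymetry, so this is only a rough estimate.
    """
    return energy_j / (rho * cp * area_m2 * depth_m)


EARTH_AREA = 5.1e14  # m^2, whole Earth surface (approximate)
OCEAN_AREA = 3.6e14  # m^2, ocean surface (approximate)

energy = heat_gained(0.2, EARTH_AREA, 44)  # about 1.4e23 J
print(f"{energy:.2e} J, {layer_warming(energy, OCEAN_AREA, 3000.0):.3f} K")
```

With these assumptions the layer warms by roughly 0.03 K, the same order as the 0.037°C quoted above; the crude constants mean an exact match should not be expected.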

Since the 1960s, the water mass characteristics of the relatively shallow Sub-Antarctic Mode Water (SAMW) and of the subtropical gyres in the Indian and Pacific basins have been changing. Most studies comparing earlier historical data (mainly from the 1960s) with more recent World Ocean Circulation Experiment (WOCE) data from the late 1980s and 1990s show that the SAMW is cooler and fresher on density surfaces (Bindoff and Church 1992; Johnson and Orsi 1997; Bindoff and McDougall 1994; Wong et al. 2001; Bindoff and McDougall 2000), indicative of a subduction of warmer waters [see Bindoff and McDougall (1994) for an explanation of this counterintuitive result]. These water mass results are supported by the strong increase in heat content in the Southern Hemisphere midlatitudes across both the Indian and Pacific Oceans during the 1993–2003 period (Willis et al. 2004). While most studies of SAMW properties have shown a cooling and freshening on density surfaces in the Indian and Pacific Oceans, the most recent repeat of the WOCE Indian Ocean section along 32°S, in 2001, found a warming and salinity increase on density surfaces (indicative of subduction of cooler waters) in the shallow thermocline (Bryden et al. 2003). This result emphasizes the need to understand the processes involved in decadal oscillations in the subtropical gyres. Note, however, that the denser water masses below 300 m showed the same trend in water mass properties that had been reported earlier (Bindoff and McDougall 2000). Further evidence of the large-scale freshening and cooling of SAMW (Fig. 3) comes from an analysis of six meridional WOCE sections and three Japanese Antarctic Research Expedition sections from South Africa to 150°E. These sections were compared with historical data extending from the Subtropical Front (~35°S) to the Antarctic Divergence (~60°S), and from South Africa eastward to the Drake Passage. In almost all sections a cooling and freshening of SAMW has occurred, consistent with the subduction of warmer surface waters observed over the same period, as summarized in Fig. 3.
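"Cooler and fresher on density surfaces" means that a property is compared at fixed density rather than at fixed depth. A minimal sketch of that comparison for one pair of profiles, assuming each profile is stably stratified (density increasing monotonically with depth) and using linear interpolation in density, is shown below; the helper names and the profile values are hypothetical, not data from the cited sections.

```python
from bisect import bisect_left


def interp(x, xs, ys):
    """Linearly interpolate ys(xs) at x; xs must be strictly increasing."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError(f"density surface {x} outside sampled range")
    i = bisect_left(xs, x)
    if xs[i] == x:
        return ys[i]
    frac = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + frac * (ys[i] - ys[i - 1])


def isopycnal_difference(sigma_old, prop_old, sigma_new, prop_new, surfaces):
    """Change in a property (e.g. potential temperature or salinity) on
    fixed density surfaces between an earlier and a later profile.

    Negative values for temperature indicate cooling on density surfaces.
    """
    return [interp(s, sigma_new, prop_new) - interp(s, sigma_old, prop_old)
            for s in surfaces]


# Hypothetical potential-temperature profiles (deg C) against density (sigma units).
sigma = [26.0, 26.5, 27.0]
theta_1960s = [12.0, 9.0, 6.0]
theta_1990s = [11.8, 8.8, 5.8]
print(isopycnal_difference(sigma, theta_1960s, sigma, theta_1990s, [26.25, 26.75]))
```

The same function applied to salinity gives the freshening on density surfaces; because density depends on both temperature and salinity, cooling and freshening on an isopycnal go together.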

The salinity minimum water in the North Pacific has freshened, and in the southern parts of the Atlantic, Indian, and Pacific Oceans there has also been a corresponding freshening of the salinity minimum layer. The Atlantic freshening at depth is also supported by direct observations of a freshening of the surface waters (Curry et al. 2003). Taken together, these changes in the Atlantic and North Pacific suggest a global increase in the hydrological cycle (and in the flux of freshwater into the oceans, including melt waters from ice caps and sea ice) at high latitudes in the source regions of these two water masses (Wong et al. 1999). To the south of the Subantarctic Front, there is a very coherent pattern of warming and salinity increase on density surfaces <500 m (Fig. 3). This pattern of warming and salinity increase on isopycnals from 45°E to 90°W is consistent with the warming and/or freshening of surface waters (see Bindoff and McDougall 1994). Figure 3 summarizes the observed differences in the Southern Ocean, showing the cooling and freshening on density surfaces of SAMW north of the Subantarctic Front, freshening of Antarctic Intermediate Water, and warming and salinity increase of the Upper Circumpolar Deep Water south of the Subantarctic Front.

These observed changes are broadly consistent with simulations of warming and changes in precipitation minus evaporation. Banks and Bindoff (2003) identified a zonal mode (or fingerprint) of difference in water mass properties in the anthropogenically forced simulation of the HadCM3 model between the 1960s and 1990s (Fig. 4). This fingerprint identified in HadCM3 is strikingly similar to the observed differences in water mass characteristics (Fig. 3) in the Southern Hemisphere. In the HadCM3 climate change simulation, the zonal mode in the Indo-Pacific Ocean tends to become stronger and increasingly significant from the 1960s onward. Its strength exceeds the 5% significance level 40% of the time, while this happens only occasionally (<5% of the time) in the 600-yr control simulation. This result suggests that the zonal signature of climate change for the Indo-Pacific basin (and Southern Ocean) is distinct from the modes of internal variability, and that the anthropogenic change can be separated from internal ocean variability. The similarity of observed and simulated water mass changes suggests that such changes can already be observed. For a quantitative detection approach, the relatively sparse sampling of ocean data needs to be emulated in models.
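The comparison of how often the zonal mode exceeds the 5% significance level in the forced run and in the control run can be sketched as an empirical-threshold count. This assumes that the mode's amplitude has already been computed for each time window of both runs (for example, by projecting the fingerprint onto each window) and takes the threshold as the 95th percentile of the control amplitudes; it is an illustration, not necessarily the exact procedure of Banks and Bindoff (2003), and the function name is hypothetical.

```python
from statistics import quantiles


def exceedance_fraction(forced_amplitudes, control_amplitudes, significance=0.05):
    """Fraction of forced-run amplitudes above the control run's
    (1 - significance) empirical quantile.

    `significance` should be a whole percentage such as 0.05 or 0.10,
    and the control run needs at least two amplitudes.
    """
    percentile = round(100 * (1 - significance))  # e.g. 95
    threshold = quantiles(control_amplitudes, n=100)[percentile - 1]
    exceed = sum(1 for a in forced_amplitudes if a > threshold)
    return exceed / len(forced_amplitudes)


# Hypothetical amplitudes: a control run with no trend and a forced run
# whose later windows grow beyond the range of internal variability.
control = [float(i) for i in range(1, 101)]
forced = [10.0, 50.0, 90.0, 120.0, 150.0]
print(exceedance_fraction(forced, control))
```

By construction, applying the function to the control run itself returns a value close to the chosen significance level, which is the baseline against which the forced-run fraction is judged.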

Results of detection and attribution studies in surface and atmospheric temperature and ocean heat content consistently show that a large part of the twentieth-century warming can be attributed to greenhouse gas forcing. We need to continue attempting to estimate the climate response to anthropogenic forcing in different components of the climate system, including the oceans, atmosphere, and cryosphere. We also need to more fully assess all components of the climate system for their sensitivity to climate change signals and their signal-to-noise ratios for climate change, and to synthesize estimates of anthropogenic signals from different climate variables. Also, detection studies are now